I’ve mentioned in previous posts how useful Security Onion is out of the box, but for various reasons one might have to tweak it to suit one's needs. In my case, Snort and BRO had high packet drop rates in a temporary production deployment. Since I couldn’t rely on the BRO and Snort logs, I had to regenerate them. Fortunately, netsniff-ng had an almost zero drop rate, so I could use its PCAP files for offline analysis.

Main problems when doing offline analysis:

- processing time – processing ~650GB of PCAP files through BRO and Snort can mean letting the PC do its job for several days (depending, of course, on the hardware)

- timestamps – the PCAP files carried the correct timestamps (the time the packets were captured off the wire), but when the generated logs were imported into ELSA, those timestamps were replaced by the import time instead of the original ones

The solutions to the problems were to:

- have some sort of parallel processing – one or more scripts process the PCAP files through BRO and Snort while the resulting logs are imported into ELSA as they are generated

- have appropriate log parsing in ELSA – syslog-ng and patterndb have to be configured so that the original timestamps are preserved on import


My setup

To do this, I copied the PCAP files from the production server to a test PC with a fresh installation of Security Onion. The files were saved according to the default netsniff-ng settings, i.e. ~150MB files arranged into datestamped folders (e.g. 2014-10-01/snort.log.TIMESTAMP). As you have probably gathered, my main goal was to generate BRO and Snort logs and import them properly into ELSA.
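Because the PCAP files are spread over datestamped folders, the first step of any script is to list them in capture order. The sketch below is not from the post's scripts; it assumes the layout above and that TIMESTAMP is an integer (such as a UNIX epoch), and `list_pcaps` is a hypothetical helper name.

```python
import re
from pathlib import Path

# Assumed layout, following the netsniff-ng defaults described above:
#   <pcap_root>/2014-10-01/snort.log.<TIMESTAMP>
# where <TIMESTAMP> is assumed to be an integer such as a UNIX epoch.
DATE_DIR = re.compile(r"^\d{4}-\d{2}-\d{2}$")
PCAP_NAME = re.compile(r"^snort\.log\.(\d+)$")


def list_pcaps(pcap_root):
    """Map each datestamped folder name to its PCAP files, oldest first."""
    result = {}
    for day_dir in sorted(Path(pcap_root).iterdir()):
        if not (day_dir.is_dir() and DATE_DIR.match(day_dir.name)):
            continue  # ignore anything that is not a YYYY-MM-DD folder
        pcaps = []
        for f in day_dir.iterdir():
            m = PCAP_NAME.match(f.name)
            if m and f.is_file():
                pcaps.append((int(m.group(1)), f))
        # Sort on the numeric suffix, so ...99 comes before ...100
        result[day_dir.name] = [f for _, f in sorted(pcaps)]
    return result
```

For example, `list_pcaps("/path/to/pcaps")["2014-10-01"]` (placeholder path) would give one day's files in the order they were captured.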

For processing and importing, I adapted a Python script posted on the Security Onion Google group (see the references below). For log parsing, I used modified syslog-ng.conf and patterndb.xml files, which are linked further down in the post.

Note – the scripts are a bit messy and should be adapted to your environment (e.g. the path variables).

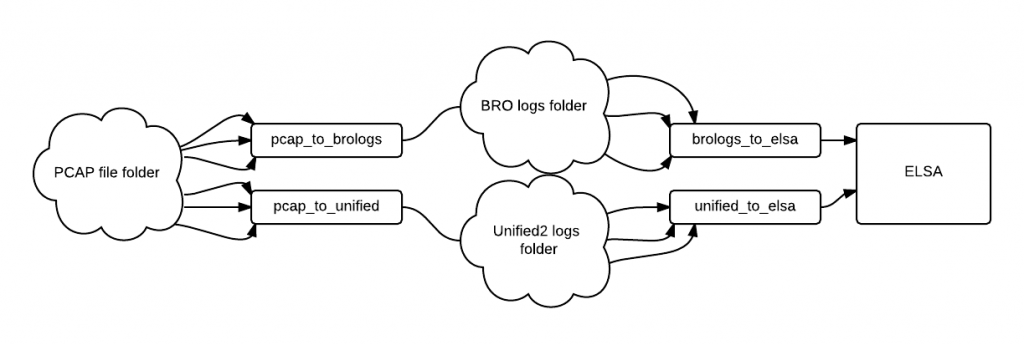

Legend:

- clouds – folders

- small rectangles – scripts

- big rectangle – program

Description:

- each folder has datestamped subdirectories (e.g. 2014-10-01) containing data (pcap files, bro logs, unified2 logs)

- the scripts (or multiple instances of the same scripts, with minor modifications) process several folders in parallel
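The post runs several script instances side by side. If you would rather drive several folders from a single Python process, a minimal sketch using the standard library's `concurrent.futures` could look like this. `process_folder` is a hypothetical callback (for instance, something that runs BRO over every PCAP in one datestamped folder), and `max_workers=4` is an arbitrary default to tune to your CPU count and disk throughput.

```python
from concurrent.futures import ThreadPoolExecutor


def process_folders_in_parallel(folders, process_folder, max_workers=4):
    """Call process_folder(folder) for every folder, max_workers at a time.

    Returns {folder: result}. If a worker raised, .result() re-raises it here.
    Threads are enough: the real work runs in external BRO/Snort processes,
    and a thread waiting on a subprocess does not hold the GIL.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {folder: pool.submit(process_folder, folder) for folder in folders}
        return {folder: fut.result() for folder, fut in futures.items()}
```

Threads rather than processes were chosen because each worker mostly waits on an external program. If the callback did heavy Python-side parsing, `ProcessPoolExecutor` would be the more appropriate swap.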

From PCAP to BRO logs

BRO can read from PCAP files using the -r switch.

/opt/bro/bin/bro -r /path/to/pcap local.bro

The Python script processes a given folder of PCAP files through BRO and saves the logs in the directory defined by a variable. The original script can also check whether the next file to be processed is newer than the last one processed, so that it stops when no new PCAP files are being generated. I have not used this “feature” because I was processing a known set of files rather than files as they were generated. Before use, set the bro_data_dir and pcap_dir variables accordingly. The to_be_removed variable can be used to exclude certain files from the folder, e.g. when some of the PCAP files have already been processed and you only need to process the rest.
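The linked script is the reference. As a rough idea of its core loop, here is a minimal sketch that reuses the variable names mentioned above (`pcap_dir`, `bro_data_dir`, `to_be_removed`) with placeholder values. Giving each PCAP its own output folder is an assumption of this sketch, not necessarily what the original script does.

```python
import os
import subprocess

# Names follow the variables mentioned above; the values are placeholders.
BRO_BIN = "/opt/bro/bin/bro"
pcap_dir = "/path/to/pcaps/2014-10-01"
bro_data_dir = "/path/to/bro_logs/2014-10-01"
to_be_removed = []  # PCAP file names to skip, e.g. ones already processed


def pcaps_to_process(names, skip):
    """Sorted PCAP names minus the ones listed in skip."""
    skip = set(skip)
    # snort.log.<epoch> names of equal length sort chronologically as strings
    return [n for n in sorted(names) if n not in skip]


def bro_command(pcap_path):
    # Same invocation as above: read the PCAP with -r and load local.bro
    return [BRO_BIN, "-r", pcap_path, "local.bro"]


def run_bro_on_folder(pcap_dir, bro_data_dir, to_be_removed):
    for name in pcaps_to_process(os.listdir(pcap_dir), to_be_removed):
        pcap_path = os.path.abspath(os.path.join(pcap_dir, name))
        # BRO writes its logs to the current working directory, so each PCAP
        # gets its own output folder; otherwise conn.log etc. would be overwritten.
        out_dir = os.path.join(bro_data_dir, name)
        os.makedirs(out_dir, exist_ok=True)
        subprocess.run(bro_command(pcap_path), cwd=out_dir, check=True)
```

Usage would be `run_bro_on_folder(pcap_dir, bro_data_dir, to_be_removed)`, one call (or one script instance) per datestamped folder.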

Importing BRO logs into ELSA

Prerequisites

- have the correct paths in the import.pl file

- have the appropriate patterndb.xml file

- have the appropriate syslog-ng.conf file

The import.pl script in Security Onion doesn’t have its paths updated for the OS, so make sure you update them as explained in the Security Onion ELSA post. The same goes for the syslog-ng.conf file.

In ELSA, I ran into a log parsing problem: the original timestamps from the PCAP files were not preserved. This is a syslog-ng and patterndb issue. The patterndb.xml file (/opt/elsa/node/conf/patterndb.xml) has to be modified so that the timestamps are preserved when parsing, which can be done using the “pdb_extracted_timestamp” variable, as discussed in this topic. A copy of the patterndb.xml file that I used can be found here.
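The timestamp that has to survive the import is the one BRO writes in the first field (`ts`) of every log line: the packet time as a UNIX epoch with fractional seconds. The small helper below is not part of the post's scripts. It assumes BRO's default tab-separated ASCII logs and converts that field to UTC, which is handy for spot-checking a few lines against what ELSA shows after import.

```python
from datetime import datetime, timezone


def bro_line_timestamp(line):
    """Return the capture time of a BRO ASCII log line as an aware UTC datetime.

    Assumes the default tab-separated format, where the first field is `ts`
    (UNIX epoch with fractional seconds). Header/footer lines starting with
    '#' and blank lines return None.
    """
    if line.startswith("#") or not line.strip():
        return None
    ts = line.split("\t", 1)[0]
    return datetime.fromtimestamp(float(ts), tz=timezone.utc)
```

If ELSA shows the import time (or 1970-01-01) for an event whose `ts` converts to a 2014 date, the patterndb timestamp extraction is not being applied.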

How logs are imported is defined in the syslog-ng.conf file. After consulting the ELSA Google group, I put together a configuration file with the appropriate parsing templates, which can be found here.

To import logs into ELSA, use the import.pl script. Example import command:

/opt/elsa/node/import.pl -f "bro" -d "comment" "/path/to/logfile"

The BRO-logs-to-ELSA Python script goes through a folder of BRO logs and runs the above command on each log listed in the bro_files_to_process variable. Make sure to set the log_dir variable to the appropriate path, and adjust the other variables if there are more BRO log files you want to import.
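A minimal sketch of that loop is shown below. It assumes the logs sit anywhere below `log_dir` (for example one subfolder per PCAP), and the entries in `bro_files_to_process` are only examples. Commands are built as argument lists, so no shell quoting is involved.

```python
import os
import subprocess

IMPORT_PL = "/opt/elsa/node/import.pl"
log_dir = "/path/to/bro_logs"  # placeholder, adjust to your setup
bro_files_to_process = ["conn.log", "dns.log", "http.log"]  # example list


def import_commands(log_dir, files, comment="comment"):
    """Build one import.pl command per matching BRO log found under log_dir."""
    commands = []
    for root, _dirs, names in sorted(os.walk(log_dir)):
        for name in sorted(names):
            if name in files:
                path = os.path.join(root, name)
                # Same form as the example: import.pl -f bro -d <comment> <file>
                commands.append([IMPORT_PL, "-f", "bro", "-d", comment, path])
    return commands


def import_all(log_dir, files):
    for cmd in import_commands(log_dir, files):
        subprocess.run(cmd, check=True)  # stop at the first failed import
```

Separating command building from execution makes it easy to print the commands for a dry run before calling `import_all(log_dir, bro_files_to_process)`.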

From PCAP to unified2 logs

Snort can read PCAP files directly using one of the --pcap-* options described in its manual. The example below shows how to do that while specifying the Security Onion configuration file and a different DAQ:

snort -c /etc/nsm/HOSTNAME-IFACE/snort.conf --pcap-dir /path/to/pcapdir -U -m 112 --daq pcap --daq-mode read-file

The PCAP-to-unified2 Python script runs the above command on a list of files built from a folder containing PCAP files. The to_be_removed list variable can be filled with the PCAP files that should be skipped.
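One way to feed Snort an explicit file list instead of a whole directory is its `--pcap-list` option, which takes a space-separated list of PCAPs. The sketch below does that. `-l` (Snort's log directory option) and the `unified_dir` value are assumptions, added so the unified2 output lands in a known place. The other flags are those of the command above.

```python
import os
import subprocess

snort_conf = "/etc/nsm/HOSTNAME-IFACE/snort.conf"  # same file as in production
pcap_dir = "/path/to/pcapdir"  # placeholder
unified_dir = "/path/to/unified2_output"  # hypothetical output dir (-l)
to_be_removed = []  # PCAP file names to skip


def snort_command(pcap_paths, conf=snort_conf, log_dir=unified_dir):
    # --pcap-list takes one space-separated string of PCAP files,
    # so paths containing spaces are not supported by this sketch.
    return [
        "snort", "-c", conf,
        "--pcap-list", " ".join(pcap_paths),
        "-l", log_dir,
        "-U", "-m", "112",
        "--daq", "pcap", "--daq-mode", "read-file",
    ]


def run_snort_on_folder(pcap_dir, to_be_removed):
    skip = set(to_be_removed)
    pcaps = [os.path.join(pcap_dir, n)
             for n in sorted(os.listdir(pcap_dir)) if n not in skip]
    if pcaps:
        subprocess.run(snort_command(pcaps), check=True)
```

With one Snort run per folder, the rule set is loaded once per folder rather than once per PCAP, which matters when there are thousands of ~150MB files.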

Note – use the same snort.conf file as in the production deployment so that the results stay accurate. In particular, this means the same IP variables describing the monitored network (e.g. HOME_NET) and the same rulesets.

Importing unified2 logs into ELSA

Unified2 logs are processed by Barnyard2, as explained in the post about how Snort alerts “pass through” Security Onion.

As stated at the beginning of the article, I wanted to process things in parallel. Barnyard2 can be part of this too, since multiple barnyard2 processes can run in parallel.

barnyard2 -c /etc/nsm/HOSTNAME-IFACE/barnyard2.conf -d /nsm/sensor_data/HOSTNAME-IFACE/snort -f snort.unified2 -w /etc/nsm/testingso0/barnyard2.waldo-1 -i 1 -U -D

This can be done in other ways, but I created multiple offline test interfaces and ran one barnyard2 process for each of them. To speed things up, I wrote a bash script that does this for me. Before using it, set the NUMBER variable to the number of interfaces you want to create (and the INAME variable if another name suits your test environment). The script creates the test interface folders along with the files they need.
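Once the test interfaces exist, the barnyard2 command only differs per interface in its paths. The sketch below generates one command per interface. It assumes each test sensor's folders are named `INAME` plus an index (as the testingso0 in the waldo path above suggests), and it keeps the remaining flags exactly as in the command above. Check the naming against what your bash script actually creates.

```python
import subprocess

# Hypothetical naming, mirroring the INAME/NUMBER variables of the bash script
INAME = "testingso"
NUMBER = 4


def sensor_names(iname=INAME, number=NUMBER):
    return [f"{iname}{i}" for i in range(number)]


def barnyard2_command(sensor):
    # Same flags as the barnyard2 line above; every path points at this test
    # sensor's own folders, so the processes never share a spool dir or waldo.
    return [
        "barnyard2",
        "-c", f"/etc/nsm/{sensor}/barnyard2.conf",
        "-d", f"/nsm/sensor_data/{sensor}/snort",
        "-f", "snort.unified2",
        "-w", f"/etc/nsm/{sensor}/barnyard2.waldo-1",
        "-i", "1", "-U", "-D",
    ]


def start_all(sensors):
    for sensor in sensors:
        # With -D each barnyard2 daemonizes, so the calls should return
        # quickly and the processes keep running in parallel.
        subprocess.run(barnyard2_command(sensor), check=True)
```

A separate waldo file per process matters because barnyard2 uses it as a bookmark of how far it got in its spool directory.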

After running the script, I adjusted the barnyard2 command above to match each test interface and copied the unified2 log files to /nsm/sensor_data/HOSTNAME-IFACE/snort (alternatively, the -d path can point to wherever the PCAP-to-unified2 script saved them).
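How the unified2 files are split between the test interfaces' spool folders is up to you. One option, sketched below and not taken from the post, is a round-robin assignment so that each barnyard2 process gets a similar number of files. `spool_dirs` would be the test interfaces' snort spool folders, and the sketch assumes the copied files keep the snort.unified2.<timestamp> names that `-f snort.unified2` matches.

```python
import shutil
from pathlib import Path


def distribute_round_robin(files, spool_dirs):
    """Assign files to spool dirs in turn: file i goes to spool_dirs[i % n]."""
    plan = {d: [] for d in spool_dirs}
    for i, f in enumerate(files):
        plan[spool_dirs[i % len(spool_dirs)]].append(f)
    return plan


def copy_plan(plan):
    for spool_dir, files in plan.items():
        Path(spool_dir).mkdir(parents=True, exist_ok=True)
        for f in files:
            shutil.copy2(f, spool_dir)  # keeps the original file name
```

Round-robin balances the number of files, not their size. Splitting the sorted list into contiguous chunks instead would give each process one continuous time range.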

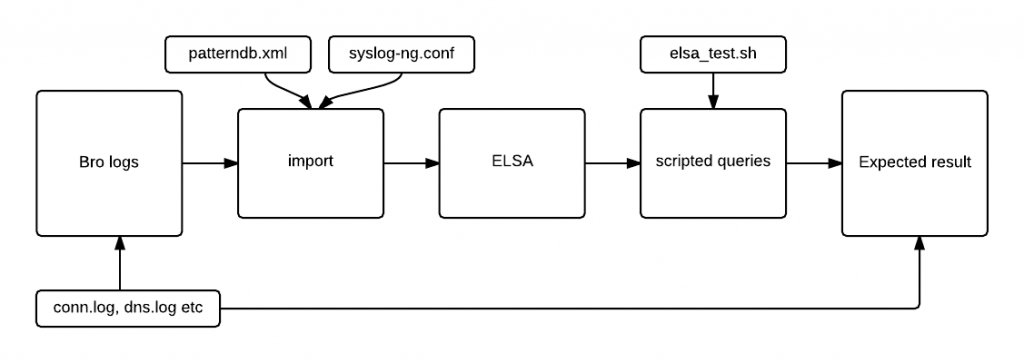

Testing the imported logs

After all the scripts had run and all the PCAP files were processed, I wanted to check that I had imported what I expected to import.

To check that everything worked as intended, I had to:

- check the number of lines in the imported log files

- query ELSA to see whether the numbers match

Description:

- the BRO logs are a small, known batch: 11 log files × 10 log entries = 110 log entries to be imported. These are samples from conn.log, dns.log and other BRO-generated log files. If there are more, or you do not know exactly how many there are, you can use the wc -l * command to count the lines of all the files in the test logs directory (keep in mind that wc -l also counts any #-prefixed header lines at the top of BRO's ASCII logs)

- the scripted queries are command-line queries to ELSA that show how the logs were imported. If the logs were imported incorrectly, they may have ended up in class NONE or with a timestamp of 1970-01-01 00:00:00; the query results will show whether that happened.
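Since `wc -l` also counts BRO's `#` header and footer lines (when present), a count that skips them is closer to the number of entries ELSA should receive. A minimal sketch, assuming the logs use the `.log` extension and BRO's default ASCII format:

```python
from pathlib import Path


def count_bro_entries(log_dir):
    """Count data lines per *.log file, ignoring '#' header/footer lines and blanks."""
    counts = {}
    for path in sorted(Path(log_dir).glob("*.log")):
        with open(path, encoding="utf-8", errors="replace") as fh:
            counts[path.name] = sum(
                1 for line in fh if line.strip() and not line.startswith("#")
            )
    return counts
```

`sum(count_bro_entries("/path/to/test_logs").values())` (placeholder path) is then the figure to compare with ELSA's totals; for the sample batch above it should be 110.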

Checking the logs by class with expected date:

perl /opt/elsa/web/cli.pl -q "class=any groupby:class start:'2014-10-01 00:00:00'" -f JSON

Checking the logs by class with unexpected date:

perl /opt/elsa/web/cli.pl -q "class=any groupby:class start:'1970-01-01 00:00:00'" -f JSON

Checking the logs by day to see if logs from separate days were imported:

perl /opt/elsa/web/cli.pl -q "class=any groupby:day start:'2014-10-01 00:00:00'" -f JSON

The total number of logs in the query results should match the number of entries imported. If some logs ended up in class NONE, there is likely a parsing issue.
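If you repeat these checks often, a small wrapper can save some typing. The sketch below only rebuilds the same queries and passes them to cli.pl as an argument list, which avoids the nested double/single quoting of the shell commands. It returns the raw JSON text because the post does not describe the structure of cli.pl's JSON output. `CHECKS` and the function names are hypothetical.

```python
import subprocess

CLI = "/opt/elsa/web/cli.pl"

# The three checks from above: expected date, epoch-0 date, per-day breakdown
CHECKS = [
    ("class", "2014-10-01 00:00:00"),
    ("class", "1970-01-01 00:00:00"),
    ("day", "2014-10-01 00:00:00"),
]


def elsa_query(groupby, start):
    # Same query syntax as the commands above
    return f"class=any groupby:{groupby} start:'{start}'"


def run_query(query):
    result = subprocess.run(
        ["perl", CLI, "-q", query, "-f", "JSON"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout  # raw JSON text, inspected by hand


def run_checks():
    for groupby, start in CHECKS:
        print(run_query(elsa_query(groupby, start)))
```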

File links

All of the following can be found in Resources:

- PCAP to BRO logs

- PCAP to unified2 logs

- BRO logs to ELSA

- maketestsensor.sh

- syslog-ng.conf

- patterndb.xml

References

- https://groups.google.com/forum/#!topic/security-onion/DY4aRVCD1tE

- https://groups.google.com/forum/#!topic/enterprise-log-search-and-archive/-6LwDer4-nk